An SEO audit will help you establish a baseline of performance from which to start analyzing and measuring.

NB: This is an article from Milestone

The very first step is to set up or gain access to Google Search Console and Google Analytics or another analytics package.

The audit can be broken into 5 parts:

- Crawling & Indexing

- Page Experience

- Clickability

- Content & Relevancy

- Local & Authority

Crawling & Indexing

Crawling assesses the ability of the search crawlers to access pages, and indexing measures whether a page has been added to the search index and is thereby ready to be included in search results.

Over the years, the search crawlers have become more proficient at crawling but the web gets bigger each day, so don’t make it harder for the crawlers to get around your site by being slow or giving confusing directions.

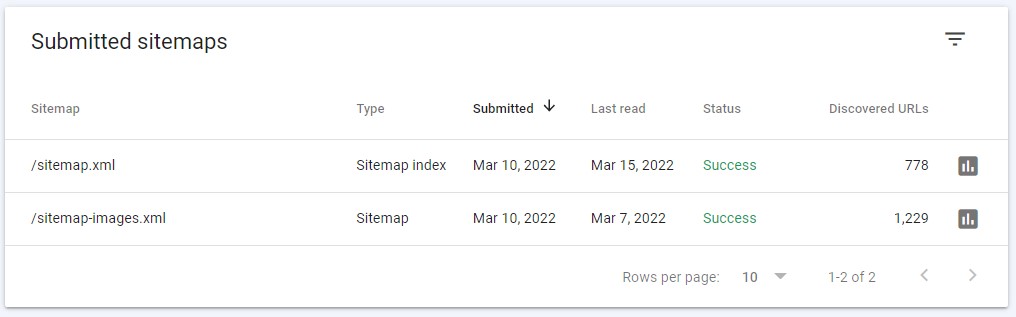

Check that you have a sitemap and that it is placed at domain.com/sitemap.xml. The sitemap should include all the pages you want crawled. Make sure your site is set to update the sitemap automatically whenever a page is added.
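As a rough illustration of what an automatically maintained sitemap contains, here is a minimal Python sketch that builds one from a list of URLs. The `build_sitemap` helper and the page list are hypothetical; most CMS platforms and SEO plugins generate the file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return sitemap XML as a string, listing each URL once, in order."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url in urls:
        if url in seen:
            continue  # skip duplicates so each page appears once
        seen.add(url)
        loc = ET.SubElement(ET.SubElement(urlset, "url"), "loc")
        loc.text = url
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical page list; a real site would pull this from its CMS.
pages = ["https://domain.com/", "https://domain.com/blog/seo-audit"]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating the file from the full page list each time a page is published is one simple way to keep it current.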

This report shows when the sitemap was last read and how many URLs were discovered in it. (Disavowal is a separate concept: it is something you do to disassociate your site from spammy URLs.)

Some examples of pages you do not want in the sitemap are test and experiment pages, where you want to control the traffic sources, and sometimes landing pages that are purposefully lean on content. You do not want the search engines to prefer that landing page over an evergreen page on the same topic. Evergreen means the content has a shelf life of years and will not go out of season in a few weeks or months, like a temporary offer.

Robots.txt is a text file that gives the crawlers instructions on where to go and what to crawl. It is fairly common for it to contain mistakes, outdated directives, or conflicting directions. A common error is to disallow crawling on a new site or site section while it is pre-deployment and then forget to change it to allow when it goes live. This could cause the site to fall out of the search results.
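One way to catch a forgotten disallow is to test the pages you want crawled against your robots.txt. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content and URLs are made up for illustration, and Python's parser may not match Google's rule handling in every edge case.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]

# Hypothetical robots.txt with a leftover pre-launch rule.
robots = """\
User-agent: *
Disallow: /new-section/
"""

# Pages you expect to be crawlable.
wanted = ["https://domain.com/", "https://domain.com/new-section/launch"]
print(blocked_urls(robots, wanted))
```

Any URL this prints is one you want crawled but are currently blocking.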

Check that GSC shows the right number of indexed pages and that they are the pages you want exposed in the SERPs. If a particular URL is not indexed, confirm that it is in the sitemap and is not disallowed, then use Inspect URL in GSC and request indexing.

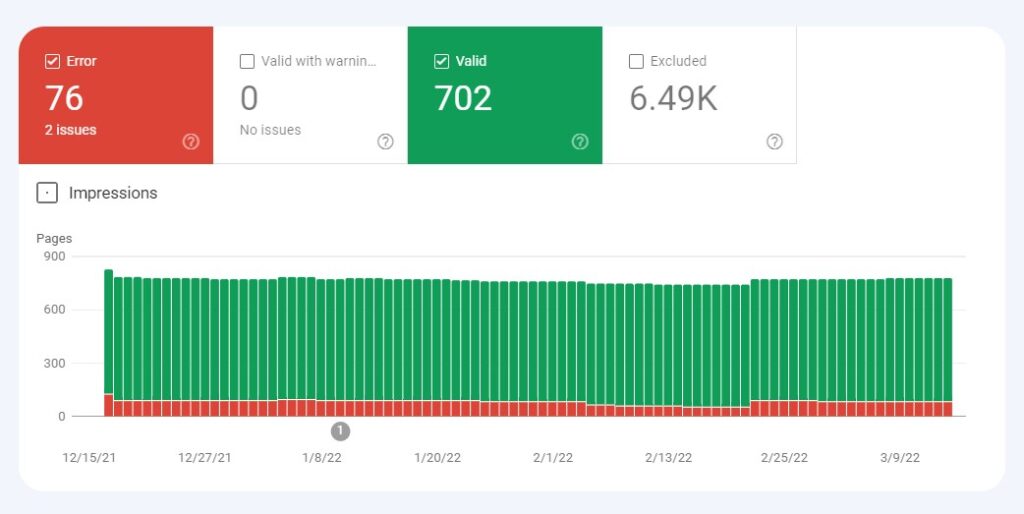

This report shows 702 crawled and indexed URLs, 76 with issues or conflicts, and 6,490 URLs excluded. This report is from a blog with more than 15 years of history, so the high number of exclusions is not an issue, but on a younger site it would be something to look into.

The other way to exclude a page from indexing is to use a noindex meta tag on the page. If the three elements (robots.txt, the sitemap, and the page-level meta tag) are not aligned, they can send conflicting signals to the crawler that could make it abandon the crawl. For example, the robots.txt could allow a section and the sitemap could include the URLs, but the page could have a noindex tag. A canonical tag is a suggested directive to Google to prioritize indexing of a different page over the current one and is the preferred way to resolve the conflict.
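The alignment check described above can be sketched in Python. This is a minimal illustration, not a full crawler: the `find_conflicts` helper is hypothetical, and it assumes you already know whether the URL is in the sitemap and whether robots.txt allows it.

```python
from html.parser import HTMLParser

class IndexSignals(HTMLParser):
    """Collect the robots meta directive and canonical URL from a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() == "robots":
            if "noindex" in (a.get("content") or "").lower():
                self.noindex = True
        elif tag == "link" and (a.get("rel") or "").lower() == "canonical":
            self.canonical = a.get("href")

def find_conflicts(url, html, in_sitemap, allowed_by_robots):
    """List the ways a sitemap URL contradicts its other indexing signals."""
    signals = IndexSignals()
    signals.feed(html)
    conflicts = []
    if in_sitemap and signals.noindex:
        conflicts.append("in sitemap but tagged noindex")
    if in_sitemap and not allowed_by_robots:
        conflicts.append("in sitemap but disallowed in robots.txt")
    if in_sitemap and signals.canonical and signals.canonical != url:
        conflicts.append("in sitemap but canonical points elsewhere")
    return conflicts

# Made-up page: listed in the sitemap, allowed by robots.txt, yet noindexed.
page = ('<head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://domain.com/a"></head>')
print(find_conflicts("https://domain.com/a", page, True, True))
```

An empty list means the three signals agree for that URL.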

The architecture or taxonomy of the site should be straightforward, and the URLs should not be too long or include too many appended elements. This is less important than in the past, as Google has figured out how to crawl through sites despite some structural hurdles.

Another practice that helps the crawlers find and attribute value to pages is internal linking. Menus and breadcrumbs link many of your site pages together, but internal links using meaningful anchor text (the linked words) add more meaning, context, and access for the crawlers. We recommend 3-5 in-text anchor links per page, especially among the blogs.
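To see how a page measures up against the 3-5 guideline, you could count its in-text internal links. The sketch below is one possible approach using Python's standard library; the `InternalLinkCounter` class is hypothetical and assumes you feed it only the body copy of the page (not menus or breadcrumbs).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class InternalLinkCounter(HTMLParser):
    """Collect (href, anchor text) pairs for links that stay on the same host."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self._href = urljoin(self.page_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            if urlparse(self._href).netloc == self.host:
                self.links.append((self._href, "".join(self._text).strip()))
            self._href = None

def within_recommended(count, low=3, high=5):
    """True when the link count falls inside the 3-5 range suggested above."""
    return low <= count <= high

# Made-up body copy with one internal and one external link.
body = ('<p>Read our <a href="/blog/sitemaps">guide to sitemaps</a> and '
        '<a href="https://example.org/x">this study</a>.</p>')
counter = InternalLinkCounter("https://domain.com/blog/seo-audit")
counter.feed(body)
print(counter.links, within_recommended(len(counter.links)))
```

Printing the anchor text alongside each link also makes it easy to spot vague anchors such as "click here".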

Page Experience

Fast-loading and stable pages are a benefit to all your site visitors, including the search crawlers. Pages with good page experience that load fast engage the humans and create efficiencies for the search crawlers, which are working on crawling the entire internet every 2 weeks or so.

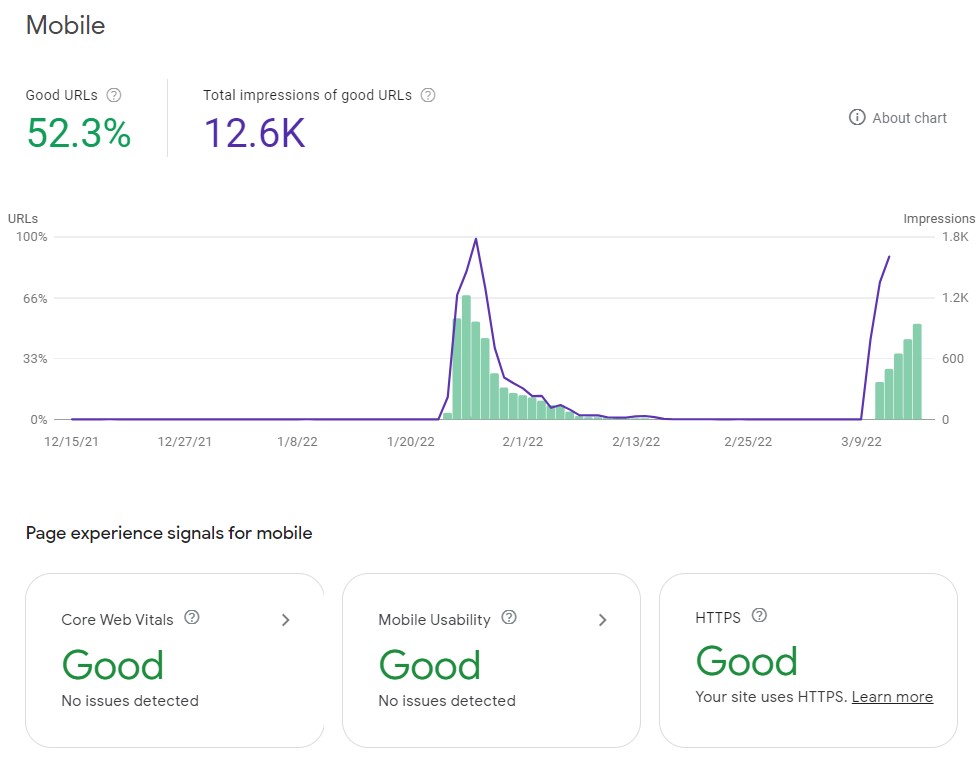

This report is mixed; it’s showing good by function but Good URLs at just over 50%. It’s a newer report in GSC and seems a bit buggy.

To improve page load time, optimize images and videos to load fast, remove unused third-party JavaScript and other code and pixels, and remove unused fonts and elements from the CSS or design template. Avoid movement of elements as the page loads, which is measured as Cumulative Layout Shift (CLS).

Make sure your menus are clear and useful and that the CTAs help people get to the next step in your website. Keep your directory structure shallow so that sections are easier to find and navigate.

Find and eliminate 404 (not found) errors for assets and pages.
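A small script can help find 404s across a list of page and asset URLs. The following is a sketch using only Python's standard library; `http_status` and `find_not_found` are hypothetical helper names, and the example run uses a fake status lookup so that it does not touch the network. Connection failures (as opposed to HTTP error codes) are not handled here.

```python
import urllib.error
import urllib.request

def http_status(url, timeout=10):
    """Return the HTTP status code for a URL using a HEAD request."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses arrive as exceptions

def find_not_found(urls, get_status=http_status):
    """Return the URLs whose status code is 404."""
    return [u for u in urls if get_status(u) == 404]

# Illustration with made-up statuses instead of live requests.
fake_statuses = {
    "https://domain.com/": 200,
    "https://domain.com/old-page": 404,
    "https://domain.com/img/logo.png": 404,
}
print(find_not_found(list(fake_statuses), fake_statuses.get))
```

To check a live site, call `find_not_found(urls)` without the second argument so that the real `http_status` is used.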

Clickability

Make your content clickable and maximize the CTR you get from your impressions in the SERPs.

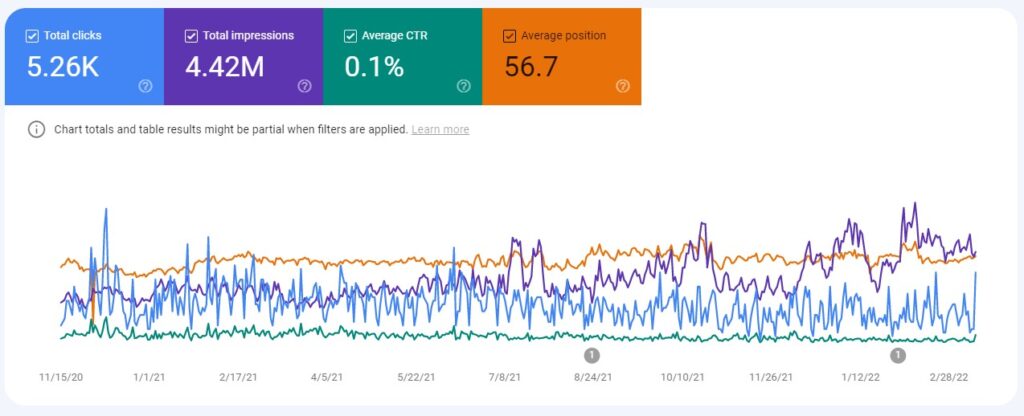

This is a favorite report in GSC and can give you much insight on performance. Use the filter to get branded and non-branded views to help with your SEO audit.
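CTR is simply clicks divided by impressions, so the branded and non-branded views can also be reproduced from exported query data. Here is a minimal Python sketch; the rows and the brand term "acme" are made up, and the substring match is a simplifying assumption.

```python
def ctr(clicks, impressions):
    """Click-through rate as a fraction; 0.0 when there are no impressions."""
    return clicks / impressions if impressions else 0.0

def ctr_by_brand(rows, brand_terms):
    """Aggregate CTR separately for branded and non-branded queries.

    rows is an iterable of (query, clicks, impressions) tuples.
    """
    totals = {"branded": [0, 0], "non_branded": [0, 0]}
    for query, clicks, impressions in rows:
        is_branded = any(term in query.lower() for term in brand_terms)
        key = "branded" if is_branded else "non_branded"
        totals[key][0] += clicks
        totals[key][1] += impressions
    return {key: ctr(c, i) for key, (c, i) in totals.items()}

# Made-up query rows: (query, clicks, impressions).
rows = [
    ("acme hotels", 50, 100),
    ("hotels near me", 10, 1000),
    ("acme login", 30, 100),
]
print(ctr_by_brand(rows, ["acme"]))
```

Branded queries usually show a much higher CTR, so the non-branded figure is often the more useful one to track when you work on clickability.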

Improve your clickability with:

- Original, relevant, current, and useful information.

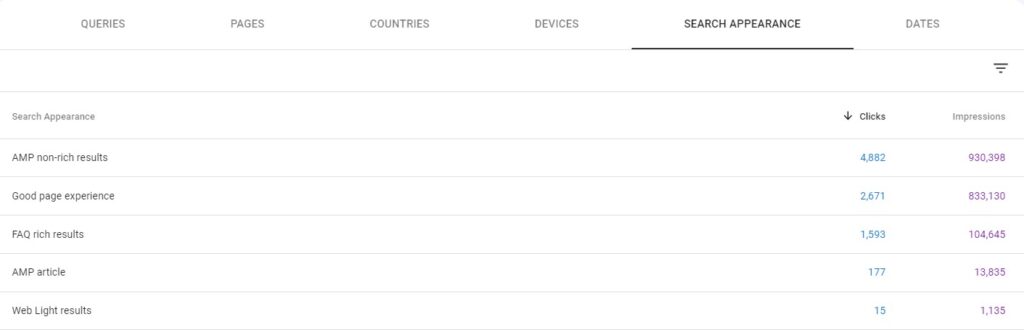

- Use the media types showing up in the SERPs, like images, FAQs, and local and knowledge panel elements. FAQs with schemas may give your FAQ rich results, which make your listing twice as tall and improve CTR.

- Use great meta titles and meta descriptions. Yeah, they are way less fun to work on than the actual content, but even after all these years, meta titles and meta descriptions still matter. Position your information as attractive without overhyping it. Use active verbs and promise value from reading or seeing the content. Be inviting and enticing.

Examples of practices that lead to low clickability are missing or duplicate tags. Google will often create tags for you from the page content if yours are missing, but you are giving up an opportunity to package your content and better control your clickability.

That will increase your clickability and traffic.
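Missing and duplicate tags are easy to detect once you have a list of pages with their titles and descriptions, for example from a crawl export. The sketch below assumes a simple mapping of URL to (title, description); the `audit_meta` helper and the sample data are hypothetical.

```python
from collections import defaultdict

def audit_meta(pages):
    """Find missing tags and duplicate titles.

    pages maps URL -> (title, description).
    Returns (missing, duplicates), where missing is a list of (url, tag)
    pairs and duplicates maps a lowercased title to the URLs sharing it.
    """
    missing = []
    by_title = defaultdict(list)
    for url, (title, description) in pages.items():
        if not (title or "").strip():
            missing.append((url, "title"))
        else:
            by_title[title.strip().lower()].append(url)
        if not (description or "").strip():
            missing.append((url, "description"))
    duplicates = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return missing, duplicates

# Made-up crawl data.
pages = {
    "/a": ("SEO Audit Guide", "How to run an audit."),
    "/b": ("SEO Audit Guide", ""),
    "/c": ("", "Contact us."),
}
missing, duplicates = audit_meta(pages)
print(missing)
print(duplicates)
```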

Content & Relevancy

When you look at your content you should think in terms of coverage and topics.

- What is the breadth of relevant topics you can address?

- What percent has been addressed?

- How is the visibility of that existing content?

- How are your competitors performing?

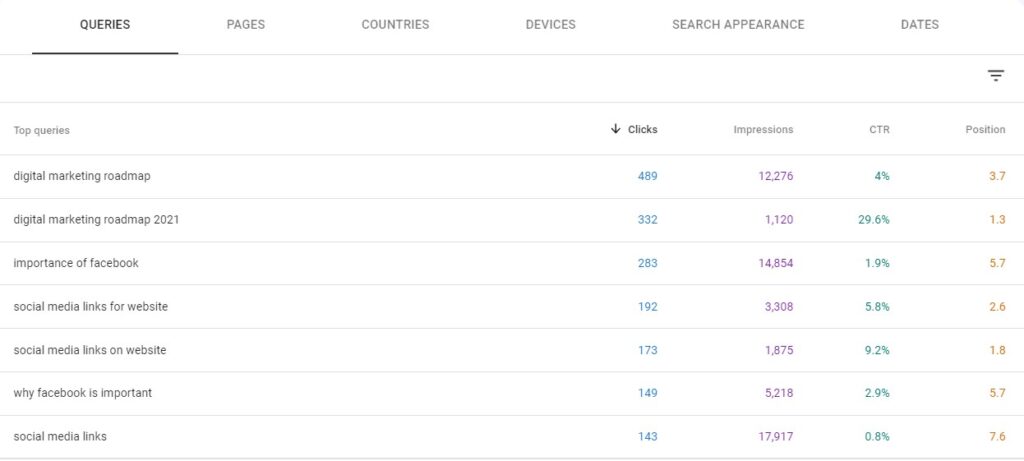

To perform these content analyses, you will need a content tool or platform. You can start with Google Search Console to see what your existing content covers and how it performs. To find new topic opportunities, you will want to use Semrush or possibly Google Ads (on the paid search side). Google Analytics will tell you which pages are the most-used landing pages, with most traffic coming from search, but it will not tell you the exact topic or keyword.
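Once you have a list of relevant topics and a list of topics your content already addresses, the coverage questions above reduce to simple set arithmetic. A minimal sketch, with made-up topic lists and a hypothetical `topic_coverage` helper:

```python
def topic_coverage(relevant_topics, covered_topics):
    """Return (percent of relevant topics covered, sorted list of gaps)."""
    relevant = set(relevant_topics)
    covered = relevant & set(covered_topics)
    percent = 100 * len(covered) / len(relevant) if relevant else 0.0
    return percent, sorted(relevant - covered)

# Made-up topic lists; in practice these would come from your keyword tool.
relevant = ["sitemaps", "robots.txt", "page speed", "local seo"]
covered = ["sitemaps", "page speed", "recipes"]
percent, gaps = topic_coverage(relevant, covered)
print(percent, gaps)
```

The list of gaps is a natural starting point for a content calendar.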

Figure out a sustainable content publishing cadence, preferably above 2 blogs or pages per week, and then plan out the writing in a content calendar.

In this section, look at your SERP coverage and what opportunities exist by media type. Based on that, you could add images, graphics, videos, text, FAQs with schemas, and reviews or the review widget from the review platform that helps the stars show up as rich media in the SERPs.
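For FAQs with schemas, the markup is typically JSON-LD using the schema.org `FAQPage` type. The Python sketch below generates that markup from question and answer pairs; the `faq_jsonld` helper and the sample question are illustrative, and valid markup does not guarantee that a rich result will be shown.

```python
import json

def faq_jsonld(faqs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    return json.dumps(data, indent=2)

# Made-up FAQ entry.
faqs = [("What is an SEO audit?", "A review of how well a site can be crawled, indexed, and found.")]
markup = faq_jsonld(faqs)
print(markup)
```

The output goes inside a `<script type="application/ld+json">` element on the page that displays the same questions and answers.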

Local & Authority

As part of your SEO audit, make sure your Google My Business profile is as complete and accurate as possible. Look for incoming questions and answer them before unrelated third parties answer for you. A complete local profile will improve the data in your knowledge panel on branded searches.

Check that all sites where you are listed use the exact same URL, Name, Address, and Phone Number. There are millions of companies out there and consistent UNAP helps disambiguate your business from others.
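A UNAP check can be as simple as comparing each listing to a reference record, field by field. The sketch below does an exact comparison, since the goal is identical data everywhere; the business details and directory names are made up, and `unap_mismatches` is a hypothetical helper.

```python
FIELDS = ("url", "name", "address", "phone")

def unap_mismatches(reference, listings):
    """Return {site: [fields that differ from the reference record]}."""
    problems = {}
    for site, listing in listings.items():
        differing = [f for f in FIELDS if listing.get(f) != reference[f]]
        if differing:
            problems[site] = differing
    return problems

# Made-up reference record and third-party listings.
reference = {
    "url": "https://domain.com/",
    "name": "Acme Hotel",
    "address": "12 Main Street, Springfield",
    "phone": "+1 555 0100",
}
listings = {
    "directory-a": dict(reference),
    "directory-b": {**reference, "address": "12 Main St, Springfield", "phone": "555-0100"},
}
print(unap_mismatches(reference, listings))
```

Small differences such as "St" versus "Street" are flagged on purpose, because consistency is the point of the check.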

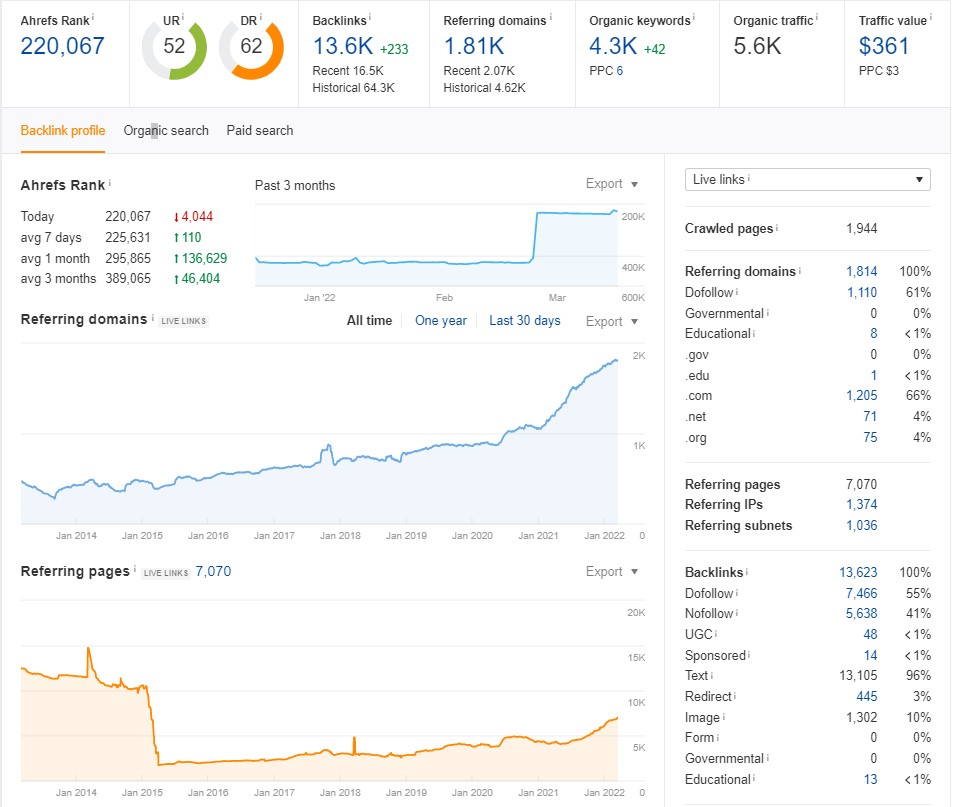

Authority for your website is established through mentions and backlinks to your site. Securing backlinks requires all of the techniques mentioned above. Create great, unique content that people want to reference and link to. You can check your link profile in Google Search Console and by using Ahrefs. Look for a pattern of steady link growth on both a domain and page basis.

Though social media sites set their links to nofollow, being very active with outstanding content in social media will help you increase your audience, and those readers might link to your content from a website or a blog.

Getting links from the biggest and most authoritative sites, universities, and government organizations will give you the most lift in authority. Earning these links often goes hand in hand with partner agreements, joint research, or press coverage.

So that is an introduction to how to do your own free SEO audit.